Over the past few years there has been increasing friction between a subset of cryonicists, and people in the Transhumanist (TH) and Technological Singularity communities, most notably those who follow the capital N, Nanotechnology doctrine.[1, 2] Or perhaps more accurately, there has been an increasing amount of anger and discontent on the part of some in cryonics over the perceived effects these “alternate” approaches to and views of the future have had on the progress of cryonics. While I count myself in this camp of cryonicists, I think it’s important to put these issues into perspective, and to give a first-hand accounting of how n(N)anotechnology and TH first intersected with cryonics.


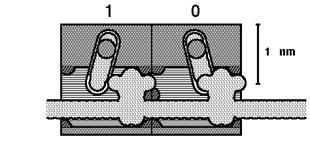

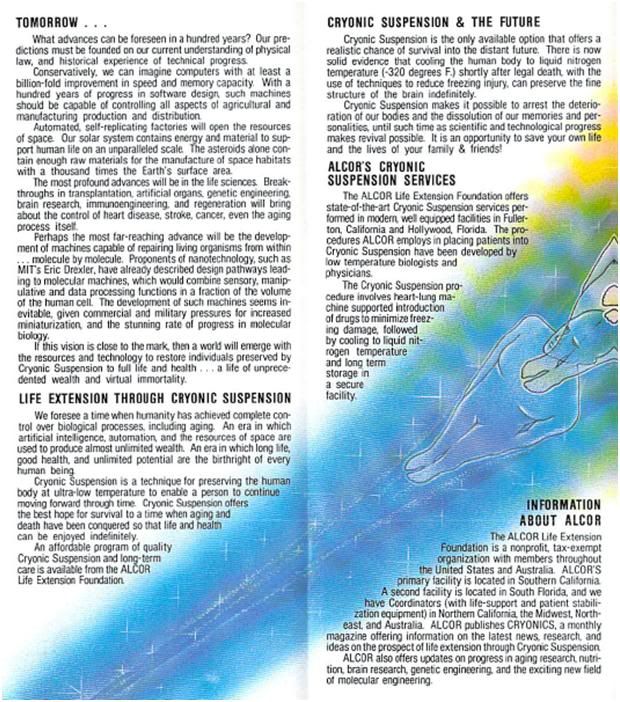

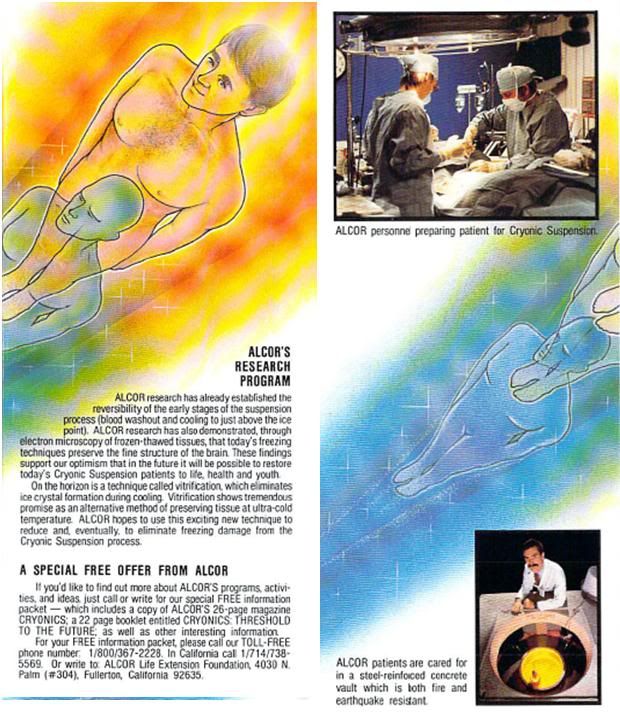

At left, the cover of the first cryonics brochure to use the idea of nanotechnological cell repair as a rescue strategy for cryopatients. The brochure was sent out as a mass mailing (~10,000 copies) to special interest groups deemed of relevance in 1984.

It is important to understand that the nanotechnology folks didn’t come to cryonicists and hitch a ride on our star. Quite the reverse was the case. Eric Drexler was given a gift subscription to Cryonics magazine by someone, still unknown, well before the publication of Engines of Creation.[3] When he completed his draft of Engines, which was then called The Future by Design, he sent out copies of the manuscript to a large cross-section of people – including to us at Alcor. I can remember opening the package with dread; by that time we were starting to receive truly terrible manuscripts from Alcor members who believed that they had just written the first best-selling cryonics novel. These manuscripts had to be read, and Hugh Hixon and I switched off on the duty of performing this uniformly onerous task.

At left, Eric Drexler, circa the 1980s.

It was my turn to read the next one, so as soon as I saw there was a manuscript in the envelope, I put my legs up on my desk and started reading, hoping to “get it over with” before too much of the day had escaped my grasp. I was probably 5 or 10 pages into the Velobound book, when I uttered an expletive-laced remark to the effect that this was a really, really important manuscript, and one that was going to transform cryonics, and probably the culture as a whole. After Hugh read it, he concurred with me.

At right, Brian Wowk, Ph.D.

Drexler was soliciting comments, and he got them – probably several hundred pages’ worth from Hugh, Jerry Leaf and me. And he listened to those comments – in fact, a robust correspondence began. I think that the ideas in Eric’s book, and to a large extent the way he presented them, were overwhelmingly positive, and that they were very good for cryonics, in the bargain. As just one small example, a young computer whiz kid, who was writing retail point-of-sale programs in Kenora, Canada, was recruited mostly on the basis of Drexler’s scenarios for nanotechnology and cell and tissue repair. His name, by the way, was Brian Wowk. As an amusing aside, the brochure that recruited Brian to cryonics is reproduced at the end of this article; we thought it was cutting edge marketing at the time (hokey though it was, it was indeed cutting edge, in terms of content, if not artistic value).

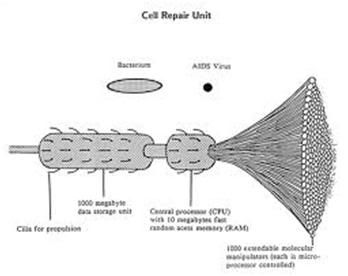

At left, one of the first conceptualizations of what a nanoscale cell repair machine might look like. This drawing was made by Brian Wowk and appeared in the article “Cell Repair Technology,” Cryonics Magazine, July 1988; Alcor Foundation, pp. 7, 10. More sophisticated images were to follow (see below).

Engines and Drexler’s subsequent book Nanosystems,[4] explored one discrete, putative pathway to achieving nanoscale engineering, and to applying it to a wide variety of ends. That was and is a good thing, and both books were visionary and scientifically and technologically important, as well. Drexler never claimed that his road was the only road, and for the record, neither did we (Alcor). What was exciting and valuable about those books and the ideas they contained was that they opened the way to exploring the kinds of technology that would likely be required to rescue cryopatients. Even more valuably, they demonstrated that such technologies were, in general (and in principle) possible, and that they did not violate physical law. That was enormously important – in no small measure because they did so by providing a level of detail that was previously largely missing in cryonics. Yes, prior to this time Thomas Donaldson had explored biologically-based repair ideas[5] (as had I[6]), but these ideas were more nebulous and they lacked the necessary detail.

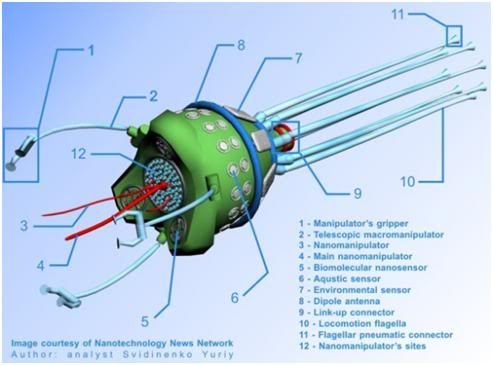

The idea of cell repair machines has now entered mainstream science and culture, as is apparent in the illustration above, by artist Svidinenko Yuriy in 2008 (http://www.nanotech-now.com/Art_Gallery/svidinenko-yuriy.htm).

If nanotechnology had stayed nanotechnology, instead of becoming Nanotechnology, then it would all have been to the good. By way of analogy, I’m not irrevocably wed to the idea of cryopreservation. I have no emotional investment in low temperatures; on the contrary, the need to maintain such an extreme and costly environment without any break or interruption scares the hell out of me. I’d much prefer a preservation approach that has been validated over ~45 million years, such as the demonstrated preservation of cellular ultrastructure in glasses at ambient temperature, in the form of plant and animal tissues preserved in amber.

Buthidae: Scorpiones in Dominican Amber ~25-40 Million Years Old [Poinar G and Poinar R. The Quest for Life in Amber, Addison-Wesley, Reading, MA, 1994.]


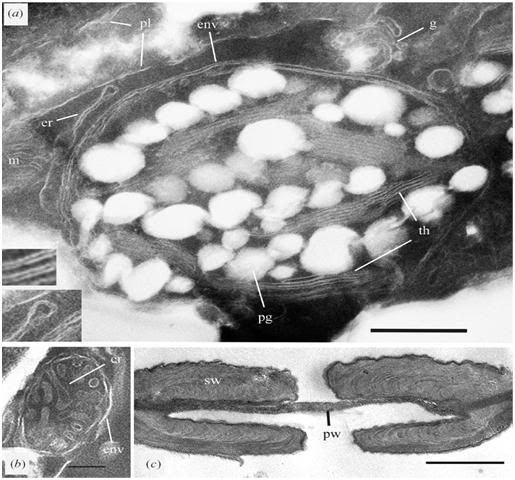

Plant Cell Ultra-structure in Baltic Amber ~45 Million Years Old: Transmission electron micrographs of ultrathin cross-sections of the amber cypress tissue. (a) Section of a parenchyma cell with a chloroplast, the double membrane envelope (env), thylakoid membranes (th) and large plastoglobuli (pg), membranes of the endoplasmic reticulum (er), the Golgi apparatus (g), the plasmalemma (pl) and part of a mitochondrion (m). (b) Cross-section of a mitochondrion with the outer envelope (env) and cristae (cr). (c) Cross-section of a double-bordered pit from a tracheid-like cell with fine structures of the primary and secondary cell walls. Size bars: (a) 500 nm; (b) 200 nm; (c) 1 mm. [Proc. R. Soc. B 272, 121–126 (2005)]


And if such an approach is ever developed, I’ll give it every consideration, with no ego or emotional attachment to cryopreservation.

At right, nanoscale “rod-logic” mechanical computer, as envisioned by Drexler.


Unfortunately, that’s not what happened vis-à-vis nanotechnology, and a clique of people emerged who were heavily emotionally invested in a 19th century mechanical approach to achieving a “technological singularity.” I know I never thought that rod logic computers would be the technology used to run teensy tiny mechanical robots that would repair cryopatients. Truth to tell, I have only vague ideas how repair will be carried out on severely injured patients, and the most credible of those involve information extraction and “off-board” virtual repair. And while I agree that the pace of technological advance is accelerating, I don’t believe in some utopian singularity, because I also know that these advances are not one-sided; they carry enormous costs and liabilities, which will to some degree offset their advantages.

To sum up, it isn’t the ideas of accelerating technological advance, nanotechnology, or any combination of these ideas per se that have been so pernicious; rather, it is the adoption of a utopian (all positive) framework which is socially enforced as the mandatory context in which these ideas must be viewed, that has been so destructive. That is certainly not Eric Drexler’s fault, and I would go so far as to argue that it was at least as much the responsibility of the cryonics organizations that systematically purged people who didn’t adhere to this party line for (among many reasons) the simple fact that failure to project this idealized and easily grasped view of the future was not good for sales. These ideas, presented in an inevitably utopian framework, do in fact get customers. And customers were exactly what every then (and now) extant cryonics organization wanted, and still want: not members, not people to join in the task at hand, but customers. Customers pay their money, get their goods and services, and that’s it, unless they come back as repeat business.

I think we can forget the “repeat business” element in cryonics, for the moment. So, what we now have are people who are increasingly showing up, no longer even alive, paying their ~ $45K, plopping into liquid nitrogen, and sitting there with the expectation that we are going to revive them. And, after all, why shouldn’t they do this, since it is what they are being sold.

For all practical purposes, there is no easily imaginable amount of money that would really cover the costs of a single person’s truly reliable cryopreservation and revival. From the start, cryonics societies were conceived of as mutual aid organizations. This was because of the open-ended and uncertain nature of the idea. Traveling to the future is most akin to signing on to a wagon train to the western United States in the 19th century. You paid your money, and then you worked your ass off. If you were lucky, you made it to California, to Salt Lake, or wherever else you were pioneering to, alive and in one piece. In no way did “going west” imply buying a ticket on the Super Chief, or even on the stage coach. That is where cryonics is right now; it is a pioneering undertaking, but more importantly it is an experimental procedure, and almost everyone seems to have lost track of that reality.

As to FM-2030 (left), I have a lot of sympathy with John La Valley’s article,[7] and I took one hell of a lot of heat for running it, as I was the editor of Cryonics magazine at the time. However, in so many ways FM was a special case, and I believe he deserves considerable forbearance from us – if for no other reason than that he was, indeed, one of us.


I met FM for the first time in the early to mid-1980s, when he invited me to his apartment in Los Angeles to talk about how he could practically and immediately help cryonics. He started by signing up, and followed through by taking me to countless social functions to meet a lot of very influential (and very interesting) people. I gained a lot of insight from those efforts. He also relentlessly exhorted people to sign up, and to do it now, and he recruited at least 5 people to Alcor in the 1980s-90s that I am personally aware of. He was also a good and decent man; someone whom people in general liked – and there were not then, or now, many people promoting cryonics who fit that description. He was a wildly overoptimistic man, but more importantly, he put his money and his actions where his mouth was, and that cannot be said for most of the others in the TH community.

Ray Kurzweil (right) is frustrating in many ways, but again, this is a man who has, on balance, made really important contributions to the broad set of issues that confront us. His discrete analysis of the historical trend of diverse technologies would make him invaluable as a stand-alone contribution.[8] That we don’t agree with his conclusions is a different matter, and shouldn’t be conflated with the overall intellectual worth of his contributions. Also, and this is very important, he has not in any way directly involved himself in cryonics, nor has he been critical of it, let alone someone who has ever even remotely attacked it. If he, or FM, had sought out leadership positions in cryonics, and then imposed their world view, my attitude would be very different. As it is, we as cryonicists invited both FM and Kurzweil to speak at our functions and to write for and about us. We were only too happy to accept their help (and indeed to solicit it) when we thought it to our advantage. As a consequence, I’m unwilling to attack these men, or to devalue their very real contributions. Sure, we can and should be critical of ideas and approaches that we believe (or know) are damaging to cryonics. But it is very important to separate the men from their ideas, and our friends from our enemies. We have far too few of the former, and far too many of the latter.


References

1. Plus M: Editor’s Blog March 8, 2011: Is Transhuman Militance a Threat to H+? : http://hplusmagazine.com/2011/03/08/is-transhuman-militance-a-threat-to-h/. In: Humanity +.

2. deWolf A: Cryonics and transhumanism: http://www.depressedmetabolism.com/2009/02/11/cryonics-and-transhumanism/. Depressed Metabolism February 11th, 2009

3. Drexler K: Engines of Creation: http://e-drexler.com/p/06/00/EOC_Cover.html. New York: Bantam Doubleday Dell; 1986.

4. Drexler K: Nanosystems: Molecular Machinery, Manufacturing, and Computation: http://e-drexler.com/d/06/00/Nanosystems/toc.html. New York: John Wiley & Sons 1992.

5. Donaldson T: 24th Century Medicine: http://www.alcor.org/Library/html/24thcenturymedicine.html. Analog 1988, 108(9).

6. Darwin M: The anabolocyte: a biological approach to repairing cryoinjury: http://www.nanomedicine.com/NMI/1.3.2.1.htm. Life Extension Magazine: A Journal of the Life Extension Sciences 1977, 1(July/August).

7. La Valley J: Are You A Transhuman? A short, irate book review: http://www.alcor.org/cryonics/cryonics9008.txt. Cryonics 1990 11 (121):41-43.

8. Kurzweil R: The law of accelerating returns: http://www.kurzweilai.net/the-law-of-accelerating-returns. Kurzweil Accelerating Intelligence March 7, 2001.

1984 Alcor Brochure

I’ve done my share of mocking FM-2030, but as you’ve said, he had his priorities straighter than you see in most transhumanists and even in many cryonicists. His passion comes out especially strong in the posthumous ebook version of “Countdown to Immortality.”

Ironically the younger transhumanists talk a good game about “living forever” and how much they expect to learn from the extra time, yet they tend to dismiss reality checks from older men like me who started to think about, and live with the consequences of, these ideas before many of them were even born. Apparently “forever” from their perspective doesn’t include living through middle age, as currently defined, and learning a few things along the way that they might want to know about. One of these junior transhumanists has made a “career” with a mime act, as John LaValley might call it, about building “friendly AI.” I suspect he’s really constructed a trap for himself; when the gimmick stops working and the money stops coming in to support his lifestyle, he turns from a visionary supergenius and geek celebrity into a middle-aged high school dropout with no history of holding real jobs, and no marketable skills. I suspect a number of transhumanists share this individual’s lack of substance, seriousness and staying power.

As a good friend of mine recently said, “When we are young, we imagine the future as something wonderful, stacked on top of everything that already was, not annihilating it and taking its place.” He is right.

Many years ago, when I was a teenager, Curtis Henderson was driving us out of Sayville to go to the Cryo-Span facility, and I said something that irritated him – really set him off on a tear. Beverly (Gillian Cumings) had just died, and it had become clear that she was not going to get frozen, and I was moaning about it, crying about it in fact, and this is what he said to me: “You wanna live forever kid? You really wanna live forever?! Well, then you better be ready to go through a lot more of this – ’cause this ain’t nothin. Ever been burned all over, or had your hand squashed in a machine? Well get prepared for it, because you’re gonna experience that, and a lot more that’s worse than either you or me can imagine. Ever lost your girlfriend or your wife, or your mother or your father, or your best friend? Well, you’re gonna lose ‘em, and if you live long enough, really, really long enough, you’re gonna lose everybody; and then you’ll lose ‘em over and over again. Even if they don’t die, you’ll lose ‘em, so be prepared. You see all this here; them boats, this street, that ocean, that sun in that sky? You’re gonna lose ‘em all! The more you go on, the more you’ll leave behind, so I’m telling you here and now, you’d best be damn certain about this living forever thing, because it’s gonna be every bit as much Hell as it is Heaven.”

He was right, too. — Mike Darwin

Just to lighten things up a little, this clip from “Harvey Birdman, Attorney at Law,” featuring the Jetson family, comes close to the truth about many Americans’ deteriorated physical condition in the real 21st Century.

http://video.adultswim.com/harvey-birdman-attorney-at-law/do-we-have-to-walk.html

Speaking of taking a long view, this evening Dave Pizer and I discussed bin Laden’s assassination. I brought up the possibility that if we survive long enough, the historians of a future century might consider the current conflict between Islam and the West as an extension of the Crusades. On a freethought forum I’ve posted that we could also eventually see the “Jesus who?” era. The prospect of a really long life shows the expendability of a lot of what we take for granted now.

Henderson definitely got that right. One thing to keep in mind if this life extension/cryonic business does work out and we all make it, everything that is familiar to us is going to be gone and the future society and people will be totally different.

I got a small taste of this experience when I left the “sun belt”, due to a bad recession, and ended up in Japan in 1991. It was an unlikely series of events, none of which I expected, that led to me ending up in Japan (until it actually happened, I never even DREAMED of actually living in a place like Japan). Once there, and knowing that I was going to remain there for a multi-year period, I found that everything that was familiar to me was gone, and I mean GONE. It took me about a year to get used to this and to psychologically create a new “life” for myself.

If you’re into life extension and are successful with it, you get to do this on a bigger scale at some point in the future.

> The more you go on, the more you’ll leave behind, so I’m telling you here and now, you’d best be damn certain about this living forever thing, because it’s gonna be every bit as much Hell as it is Heaven.”
>
> He was right, too.

As I’m sure you’re all too well aware, the current crop of >Hists (who have basically subsumed the cryonics crowd by now) **do not** want to hear this kind of thing.

Say it on the Extropians’ (or WTA Talk, or wherever) and you’ll be shouted down, and eventually just moderated off.

Read an SF author who makes that the theme of a story, and you can be sure that SF author will **not** be among the >Hist canon.

Years ago, I introduced a friend of mine (a very smart guy, who will himself turn 75 this year) to the Extropians’ list, and after a few weeks reading it (and even contributing one or two posts), he pulled the plug in disgust, dismissing it as “buncha kids wanna live forever”. He was right, of course, though I’d say “buncha kids wanna live forever **without thinking too clearly about what _exactly_ that might entail**”.

What **exactly**, for example, is going on when a prominent “anti-death crusader” among the >Hists (I’m not going to name the name here) uses the death of a family member – a very young family member who just **committed suicide**, for crying out loud – as an excuse to redouble his (somewhat self-aggrandizing, but what else is new?) rhetoric? But – what about the **suicide**? What made your family member want to do that? Did you even see it coming? What does that imply **generally**? – Yes, immortality is there for the grabbing for those who remain desperate enough to live; and as for the rest – to hell with them? Is that how you feel now about your family member?

But no. Off limits. Not even acknowledged publicly (the “suicide” verdict came from independent news sources).

http://www.amazon.com/The-Visioneers-Scientists-Nanotechnologies-Limitless/dp/0691139830/

I’ve skimmed through the parts of The Visioneers that Amazon lets me read online, and it strikes me that I could have written this book myself. I’ve witnessed or at least followed the events it describes, starting with my membership in the L-5 Society in the late 1970s and my involvement in the cryonics subculture starting in the 1990s. I’ve even met or I know some of the people mentioned in this book.

It seems to cover a lot of the same ground as Ed Regis’s Great Mambo Chicken published in 1990, but with the benefit of an additional 20 years added to the baseline to show that many of these futurist ideas from the 1970s and 1980s – centered around Gerard K. O’Neill and Eric Drexler – which generated such enthusiasm at the time apparently don’t work.

Unfortunately cryonics has gotten mixed up in this bad futurology, when cryonics has an independent set of problems and ways of trying to solve them. The association has apparently damaged cryonics’ credibility as well.

So I imagine that someone will write a similar book about 20 years from now about (the probably dead or cryosuspended) Ray Kurzweil and Eliezer Yudkowsky to show how they misdirected a generation of geeks with their futurist fantasies the way O’Neill and Drexler did in the 1970s and 1980s.

Mark, you’re either misunderstanding or misrepresenting the entire concept of Friendly AI when you suggest this is a form of futurological optimism.

http://singinst.org/singularityfaq#FriendlyAI

Perhaps you are willing to defend the claim that AI is no threat to us whatsoever?

I am skeptical of near-term (within 40 years) AI for mostly technical reasons. Brains really are not like computers at all. Some of this is discussed earlier in this site on the topic of whether human identity survives cryo-preservation. It is important to realize that brains work on several levels (LTP, synaptic connections, etc.) in ways that are not duplicated in computers. Also realize that there are over 160 types of synapses and that the dendritic synapse connections are a dynamic process where they are being deleted and recreated all the time. The semiconductor people are not even thinking of creating this kind of dynamism in semiconductor devices. Also, human memory and processing is chemical in nature, not digital electronics.

The hardware complexity in semiconductor devices will probably exceed that of human brains in the next 10 years or so. But it will not have any functional resemblance to the human brain at all. As for making the software to somehow emulate the human brain, all I can say about that is that Moore’s Law has never applied to software design. It applies only to the semiconductor hardware. I have a friend of a friend who is one of the developers of the Mathematica programming tool. He took Drexler’s class when it was offered at Stanford. He told me he thought the hardware would get there in about 50 years, but the software would take about 1,000 years.

No, I’m not concerned about AI, friendly or unfriendly, at all.

As for nanotech itself, developments continue at a steady rate and it’s all “wet”. Here’s an example of a nanotechnology development that was announced just yesterday:

http://nextbigfuture.com/2011/04/nanofibrous-hollow-microspheres-self.html

Here’s some graphene paper that is 10 times stronger than steel:

http://nextbigfuture.com/2011/04/graphene-paper-that-is-ten-times.html#more

Whether humanlike brains can be simulated or not is utterly irrelevant to the intelligence explosion question.

People who think AI is simply humanlike computers (and therefore is impossible if biology is sufficiently different) miss the point. AI is computers doing something like what humans do. It does not matter if they employ similar mechanisms. Computers do NOT employ similar mechanisms for addition of numbers, speech and image recognition, or a host of other tasks. That does not diminish their impact or ability to replace humans at those particular tasks in many circumstances. Usually we are thankful for this to be the case because they tend to replace us at very boring tasks.

If AI becomes more general and goal-based in scope, we have reason to be concerned because it would (by default) be most similar to a very ruthless and intelligent human with a fanatical devotion to arbitrary goals, which would not necessarily be inclusive of the survival of humanity. The mechanism by which it becomes capable of pursuing general goals does not matter to the argument. Humans can pursue general goals: Unless you can defend the assertion that this is based completely on our unique biological make-up (unlike, you know, math, speech, navigating mazes, and so forth), the fact that computers use different mechanisms is irrelevant.

No, it’s not. Nor do I think there will be any “intelligence explosion”, which sounds like another term for “singularity” anyways.

Human sentience and autonomy result from the characteristics of brains that I mentioned previously. I don’t think it possible to realize sentient AI in semiconductor devices without these characteristics. Indeed, I expect progress in semiconductor scaling to stop once the molecular level is reached, which will be 2030-2040.

Also, any such intelligence explosion is unlikely to make much of a difference even if it were possible. The limiting factor in the rate of technological innovation is not the aggregate intelligence of all of the scientists in any particular field. It’s factors like the capital costs involved in R&D as well as the physical time to perform such experiments and to integrate the results of those experiments into follow-on experiments or useful technology. Having a bunch of IQ 300 AIs is not going to accelerate this process one bit.

> No, it’s not. Nor do I think there will be any “intelligence explosion”, which sounds like another term for “singularity” anyways.

Please read the FAQ that I linked to previously, starting at section 1.1. Yes the term refers to one kind of singularity.

> Human sentience and autonomy results from the characteristics of brains that I mentioned previously.

As do their math, language, image processing, and other capabilities which have been implemented on computers.

> I don’t think it possible to realize sentient AI in semiconductor devices without these characteristics.

“Sentient” AI is not required for this argument to work, only generally intelligent AI. Not all minds capable of self-improvement and technological advancement are sentient.

Nevertheless, you have little data on which to base the assertion that computerized sentience cannot be achieved. We can achieve computerized image manipulation, speech recognition, and mathematical reasoning, all by following radically different paths from those used by human brains. Why not sentience as well?

For myself, I would like to see two things in this discussion. First, a definition of what is meant by “sentience,” and second, a reasonably rigorous theory of consciousness. These are important from my perspective, because they speak to the issue of the likelihood of the creation of a conscious intelligence.

Flexible purposefulness, as is seen in higher animals, and especially in man, seems to require that a number of elements be present and connected in a specific configuration. The first of these is likely to be recursiveness: the ability to model the environment within the environment. The second might well be parallel processing of different aspects of the modeled reality; animal and human minds appear to be a multiplicity of modular processing units which don’t merely crunch numbers, but which actually monitor and model the external environment and often do so competitively (ah capitalism!). There also needs to be some kind of quick sorting and motivating mechanism that operates independent of rational processing. The best candidate for that at the moment seems to be feeling and emotions. Facts and data, per se, are all of equal value, unless they are put into a context. All of advertising, and much of parenting, is “getting the attention, interest, and finally the commitment to action on the part of the target intelligence.” In fact, in looking over the discussion on New Cryonet between Luke and Mark, you can see that this pertains to adults, as well. If you really want the full command of someone’s attention, you can get it most quickly and reliably by pissing them off. This works vastly more reliably than rewarding them, because rewards are relative: it’s hard to know what constitutes a reward for a given person, whereas pissing them off is guaranteed to work, because it is perceived as threatening, and therefore URGENTLY linked to survival.

One interesting thing I’ve observed about intelligences is that the hungrier or needier they become, the more susceptible to influence by reward they become. By definition, almost everyone with a computer and Internet access is “making it,” in terms of survival, so adding a little to their bounty isn’t going to be nearly as effective as threatening to take it away (contrast the reaction of a child being given a second lollipop, with that of removing the first one – or even threatening to).

I don’t think that consciousness necessarily requires the configuration of a biological brain. That’s not where I see the bottleneck. I think the real issue is understanding how the enormously complex mechanics of consciousness, even at a rudimentary level, are configured and operate. And I certainly don’t see that happening either accidentally or easily. Biological evolution produced consciousness absent planning or design, but it took a long, long time. Thus, I personally wouldn’t give a pence or a pound to people trying to create “friendly AI” until they have first demonstrated a rigorous theory of consciousness, and preferably created a rudimentary one. I’d just as soon give a Medium money to conjure me up Casper, the Friendly Ghost.

And yes, I do think that artificial consciousnesses and artificial intelligences will be possible, and that they might conceivably come into existence sometime in the next 20 to 40 years. But I don’t think it will happen accidentally, or all at once. Having said that, given unfettered and widely distributed development in the same way computing and consumer electronics have enjoyed, the time from first proof of principle to a serious problem or threat may be perilously short. I was just given an iPad. It doesn’t do a lot, but it is absolutely wonderful at what it does do. It is elegant and it cries out to be used. The distance between the first vacuum tube and the iPad is probably as great as the distance between rudimentary, single element models of consciousness and the first machine with high order intelligence. I suspect it will be a long hard haul – and that may be to the good.

What is a very real threat and what does exist now, or will shortly, are sub intelligent machines with either enabled or unforeseen destructive capability. Anyone who has lived in Southern California (or Mexico or much of South America) will at once appreciate the destructive capacity of ants, or of a range of surprisingly dull wild animals if they get indoors. Really clever and persistent malware already qualifies as this kind of threat, and its direct cost to businesses was $55 billion a few years ago! Think of how much all that wasted capital could have accelerated the pace of advance in medicine if it were focused on basic biomedical research.

Finally, everybody here is a cryonicist. There are no AIs, friendly or otherwise, and I submit that they are among the least of our very real obstacles to survival, and fairly far down the list of the theoretical ones, as well. Think of that 55 billion dollars – can’t you guys think of ANYTHING to do with that time and effort to further cryonics, or even your own personal survival? Sigh. – Mike Darwin

I agree that AI of a general nature is a long way ahead of us, at least as we are used to reckoning progress. However I feel quite confident that it is rational to have in place at least a minimal hedge against such an event, with competent folks ready to leap on any new AI advances and ready to competently point out how they could go wrong and what needs to be done to fix it. Humans, thanks to our long evolution under competitive circumstances, have various inhibitions and competing internal voices, which is why we don’t usually do crazy things like fly planes into buildings. There’s no guarantee that the first AI will have such inhibitions unless it is designed competently.

Furthermore if it is fully general, there’s nothing to inherently limit the blast radius should something go wrong. An atomic war, for example, would at worst wipe out humankind and other terrestrial life. A worst case AI scenario could also massacre any sentient life it happens to come across, like an interstellar plague limited only by the speed of light.

In my opinion Mark should reserve his condescension (which he does a good job with, and I don’t fault him for that) for a target that actually deserves it. I have never defended FAI research as the most likely and shortest path to life extension (though if certain assumptions hold it could in fact be), but I would say that given the sort of danger it mitigates for mankind as well as other sentient life forms, this is a job we should all be much happier having someone paying attention to.

It is also a crucial component of establishing the credibility of cryonics to let people know that someone is looking out for the future even in cases of extreme, hard to imagine, low-probability contingencies — and that there are in fact ways to competently do so. The art of rationally sorting out good from bad logic in forming these kinds of scenarios is taken very seriously by the Less Wrong cluster of communities, as it should be.

On another note, one thing I’ve seen come across clearer with Yudkowsky than just about any other transhumanist writer is the absolute moral priority of human life over any other pursuit. People shouldn’t have to die, period. Humanism isn’t so complicated that we have to put the sick and elderly on an altar to the blind and fundamentally sociopathic gods of nature. We aren’t tiny bands of hunter gatherers who are powerless before the whims of fate any more.

One, I don’t see why a 31-year-old high school dropout has any more insight into this alleged existential risk than anyone else.

Two, even if he does know something about it that the rest of us haven’t figured out yet, I don’t see what he can do about it, or why he needs money for his own “institute” to try to save us all from the threat. I mean, seriously, his fans make him sound like a benign version of Lex Luthor.

A few days after this post I had more thoughts on this remark of yours: “I suspect he’s really constructed a trap for himself; when the gimmick stops working and the money stops coming in to support his lifestyle, he turns from a visionary supergenius and geek celebrity into a middle-aged high school dropout with no history of holding real jobs, and no marketable skills. I suspect a number of transhumanists share this individual’s lack of substance, seriousness and staying power.”

I suspect that you and I are living in a paleo-past. This is probably best demonstrated by what really happens to these folks when the “gig is up” and the emperor is discovered to be sans clothes. Principally that they find employment with the greater fool at even higher pay – at a place where there is more nonsense and even more detachment from reality than was the case at their previous employer. Thus, such souls find ready employment running cryonics organizations, working in key positions in cash sucking black hole anti-aging operations and Nanotechnology threat mitigation think tanks, and then switch back and forth betwixt and between… ;-0. – Mike Darwin

That raises the question of what I call cryonics’ apostolic succession: Developing a way to find competent and dependable younger cryonicists for an unknown number of generations to look out for all our interests when we go into the dewars.

I’d like to read your current thoughts on that.

BTW, I’d like to announce my availability for the apostolic succession on behalf of current cryonauts and the ones likely to join them ahead of me, though I consider myself best suited for a “wheel horse” position.

I’ve noticed from reading the short bios of the speakers at transhumanist conferences how many of them seem to lack anything I would call useful, or even well defined, employment. For some examples:

http://humanityplus.org/conferences/parsons/speaker-bios/

What do “research fellows,” “design theorists,” and various artists and bohemians do that generates an income? How do they come up with the money for daily living expenses, not to mention for flying around the world to attend these transhumanist mime shows?

I find it all very mysterious.

> What do “research fellows,” “design theorists,” and various artists and bohemians do that generates an income?

Job descriptions in much of American business culture stopped making sense to me starting in the mid-’90s. I still can’t figure out what most people with fancy titles in the business world actually do on a day to day basis.

This is one (of many) reasons why I don’t advocate government contributions to such efforts. It sounds like they become *less* likely to work when funding is artificially plentiful, as they attract all the wrong people.

> Principally that they find employment with the greater fool at even higher pay – at a place where there is more nonsense and even more detachment from reality than was the case at their previous employer.

This silliness lasts only as long as the bubble. But the bubble ended in Fall of ’08 and there is no other bubble, despite the best efforts of Bernanke and Obama to create another. At some point, reality does return.

admin wrote:

“For myself, I would like to see two things in this discussion. First, a definition of what is meant by ‘sentience,’ and second, a reasonably rigorous theory of consciousness.”

Perhaps we need to bring qualia into this discussion…..

I would define sentience as independent volition. I think this actually does require some of the characteristics of brains that the Admin and others have mentioned in previous postings. I do not expect these to be replicated in semiconductor devices.

BTW, it really does look like semiconductor scaling by conventional fabrication techniques (deposition, patterning, etching) will reach its limits at around the 10nm design rule, which will be reached in about 10 years according to the SEMI roadmap.

http://nextbigfuture.com/2011/04/intrinsic-top-down-unmanufacturability.html

This means the end of Moore’s Law unless bottom-up manufacturing is developed. Of course the bottom-up processes will be developed and Moore’s Law pushed out another 10-20 years when the molecular level is reached. This will be the final limit. It is worth noting that “bottom-up” manufacturing will likely be based on solution-phase self-assembly chemistry, which is not too dissimilar to how brains grow and develop. If we do get sentient AI, it will be based on manufacturing processes and structures that are essentially “biological” in nature. In other words, our computers become like us, not the other way around.

There’s also the software issue. The problem with software is that Moore’s Law improvement does not apply to it. There has been no improvement in software, in terms of increased efficient use of computing resources as well as conceptual architecture, in the past 30 years. A breakthrough in software is necessary for developing AI. I see no prospect for this in the foreseeable future.

I agree that “sub intelligent machines” are a problem. Internet viruses are a form of this. Autonomous and semi-autonomous robots will become increasingly common in the near future. Unlike sentient AI, I think the robotics industry is about to take off in a big way and I think it will become a major industry, in dollar value. If, say, in 2020 or 2030, people routinely pay $10-15K for various house work and gardening robotics, that is 1/4 to 1/2 what they pay for cars. This would make the consumer robotics industry 1/4 to 1/2 the size of the auto industry, which is the world’s largest industry in dollar value. Then there are all of the commercial and industrial robot applications as well. Also, medical (robodocs and robosurgeons) and elder care robots (yes, they have these in Japan). Given the aging populations of much of the world and the, so far, lack of effective anti-aging therapies in the marketplace, this is definitely a growth market for robots as well.

I’m talking about a serious growth industry here.

Developments towards medical nanotechnology:

http://nextbigfuture.com/2011/04/researchers-construct-rna-nanoparticles.html

Can you rate, on a scale of 1 to 10, how surprised you would be if independent volition were implemented to a functional degree on semiconductor devices? Because for me it’s only about a 3.

10

Please tell me why, so that I can update my beliefs if I am wrong.

What about “independent volition” makes it innately biological, unlike speech, math, image processing, object manipulation, and so forth? How do you define the term to begin with?

Have you believed differently in the past, and if so what made you change your mind?

“There’s more than one way to do it” is actually a programming motto. Not every way is the fastest or least buggy, but there are always multiple ways to do something sufficiently complex. Thus at a guess, without pretending to know everything about the topic, it seems to me that whatever it is that “independent volition” maps to, there’s a pretty good chance that there is a way to do it that does not involve 160 different types of synapses and so forth.

But regardless, whether you want to slap the label “conscious” or “independent volition” on something is irrelevant to the question of whether it can accelerate technological change or pursue a goal in generalized ways that could accidentally endanger humanity. If these specific traits can be done in some artificial form (whether silicon or not — I don’t get why you are hung up on that particular trait), we want to be researching ways that it can go wrong and ways to prevent it.

Here’s a classic example of the hype surrounding AI:

http://nextbigfuture.com/2011/04/researchers-create-functioning-synapse.html

Here’s the reality behind that hype:

http://alfin2100.blogspot.com/2011/04/double-plus-overhype-ado-about.html

http://www.science20.com/eye_brainstorm/overhype_synapse_circuit_using_carbon_nanotubes-78308

The AI field is full of this kind of hype, as are many other areas of science. The hype seems especially pronounced in fields that are trendy or politically correct.

There’s a huge gap between what we’ve accomplished and what will ever be accomplished. While it certainly seems likely to me that AI is nowhere near the point people would like to believe it is, that says nothing about what is possible in the long run. You have commented that you are not at all worried about AI, Friendly or otherwise — where does this confidence come from?

I don’t expect sentient AI for a long time, certainly not in the next 40 years.

I view politics, bureaucracy, and hostile ideologues as the real threats to our objectives in the foreseeable future.

I don’t expect them in that range either. But my surprise at seeing them wouldn’t be all that much higher than say, global warming.

Politics and hostile ideologues are a problem we can (and should) confront in the here and now.

I see singularitarianism as something of a test-case for immortalist rhetoric. It is more extreme than cryonics, so the knee-jerk reactionaries are more likely to criticize singularity beliefs and less likely to ridicule cryonics directly. We should stress not the ridiculousness of singularity scenarios but the relative conservatism of cryonics.

Maybe what is needed here are specific indicators that mature AI is foreseeable and/or imminent. The idea of computer malware was foreseeable long before the development of the www as it exists today (and has existed for 20 years, now). An important point about viruses and other malware is that they didn’t happen by accident. They required (and still do) purposeful design of a very high order. They are also a direct product of a vast body of technological developments in computing, microelectronics, and yes, software.

If we look back over the history of computing, starting say, with ENIAC, you can retrospectively, and thus in theory prospectively, enumerate a long list of prerequisite technological developments that were necessary before malware could become a reality, let alone a threat.

So, in this spirit, I offer the following:

1) The very nature of intelligence, probably a core property, is to be able to rank and prioritize information from the environment and weight it as to its likelihood of causing harm or providing benefit. Being unable to do that would mean that we would spend all of our time chasing every possible benefit and preparing for every possible threat. Thus, we should behave intelligently with respect to risks like AI, which may be extreme, but are also distant.

2) There will be sentinel events that indicate that AI is approaching as a possibility that merits the expenditure of resources to investigate and defend against credible threats.

3) Here are some examples of what I think are likely “signs” that will necessarily precede any possible “AI Apocalypse”:

A) Widespread consumer use of fully autonomous automobiles which drive themselves to their passenger-specified destination.

B) Significant (~25%) displacement of cats and dogs with cybernetic alternatives as pets. Biological pets have many disadvantages, not the least of which are that they grow old and die, get sick, and cannot be turned off while you go on vacation. Any mechanism that effectively simulates the psychologically satisfying aspects of companion animals will start to displace them.

C) Significant (~25%) fraction of the population spends at least 50% of their highest value (most intimate) social interaction time with a program entity designed to be a friend: receive confidences, provide counseling, assist in decision making, provide constructive criticisms, provide sympathy and encouragement. The kind of program might perhaps best be understood by imagining a very sophisticated counseling program, merged with GPS, Google search, translation, and technical advice/decision making programs. The first iteration of these kinds of programs will probably come in the form of highly interactive, voice interrogate-able expert systems software for things like assisted medical decision making and complex customer service interactions. However, what most people want most in life is not to be lonely; to have someone to share their lives with. This means that any synthetic entity must necessarily be able to model their reality and to determine and then “share” their core values. Friends can be very different from us, but they must at a minimum share certain core values and goals.

They may have different “dreams” and different specific objectives in life, but they must have some that map onto our own. The first marginally to moderately effective synthetic friends will be enormous commercial successes and will be impossible to miss as a technological development. They may not be able to help you move house, or share your sexual frustrations, but if they share your passions and longings and can respond in kind, even in a general way, then they will be highly effective. It will also help a lot in that they can be with you constantly and answer almost any question you have. If you want to know what time it is in Islamabad or how the Inverse Square Law works, you can simply ask. And not only will they know the answer, they will know the best way of communicating it to you. For some, the best answer about the Inverse Square Law will be to show the equation, for others it will be a painstaking tutorial, and for others still, a wholly graphic exposition. In this sense, this kind of AI will be the BEST friend you have ever had.

Fred Pohl came very close to this concept in THE AGE OF THE PUSSYFOOT with his “Joymaker.” While the Joymaker was all these things, it wasn’t friendly – it didn’t model Man Forrester’s mind and generate empathy, and empathy derived counseling. And it didn’t know how to get Man Forrester’s attention and make him care about the advice it had to give him.

D) Blog Spam which is indistinguishable from real commentary: even when it proceeds at a very high level of commentary and interrogation.

Food for thought, in any event. Maybe Mark, Abelard or Luke can come up with a more definite set of signs: SEVEN SIGNS OF THE IMPENDING AI APOCALYPSE would be a catchy title. This is an example of the kind of thing that is really useful in protecting against and preparing for mature AI.

I would also note that there is a consistent failure in predicting the future of technologies and of their downsides: the predictions focus on implementations writ large. No SF writer envisioned tiny personal computers; and nuclear fatalists projected nation-state mediated annihilation via MAD. Evil computers were seen to be big machines made by big entities, as in the Forbin Project.

Of course, there are now big machines/networks, and our very lives now depend upon them (power grid, smart phone network, air traffic control…). However, it is the little guys who represent the threat, by being able to crash these systems and keep them down for weeks or months.

If we look at the threat from AI, it seems to me it is most likely to come from intense commercial pressure and competition to produce the kinds of things we want most; namely friends who understand us and care about us. What wouldn’t we give to have someone who would not only listen to us and empathize with us, but who would also focus and bring to bear all the knowledge and expertise available on the Internet to help us reach our personal goals? That’s where the money is and that’s where the danger is. A life coach with the intelligence of god and the relentlessness of the devil.

Remember the Krell and the monsters from the Id.

Mike Darwin

These are all good indicators.

I would add one more: complete automation of the manufacturing supply chain.

> What wouldn’t we give to have someone who would not only listen to us and empathize with us, but who would also focus and bring to bear all the knowledge and expertise available on the Internet to help us reach our personal goals?

This would not work for me as I would not consider such a “person” to be real. I prefer to be around real people, no matter how much they irritate and piss me off. Also, I think empathy works both ways. By wanting someone to empathize with you, you are wanting something out of that person. You have to offer something of value in return. This is the nature of (proper) relationships. This is part of what makes real people real.

I find the idea of AI “counselors” and “friends” to be rather creepy. I guess I’m a bio-fundamentalist in this sense. People are people and machines are machines and never twain shall meet.

I view machines as tools. Tools increase your ability to make things and do stuff. One uses computers to design things and robotics manufacturing to make them, whether it be a house, yacht, or a space colony. I want to make things. Much of the problem I have with contemporary (post 1995) America is that they’re not much into making things and, worse, they want to inhibit those that do.

This is a problem. Problems exist to be solved.

> People are people and machines are machines and never twain shall meet.

I don’t know, I feel kind of like a biological machine with a few billion years of evolution behind it :)

> A) Widespread consumer use of fully autonomous automobiles which drive themselves to their passenger-specified destination.

Yes, Mark Humphrys (among many others) spoke about this back in ’97:

http://computing.dcu.ie/~humphrys/newsci.html

————-

“[I]f some of the intelligence of the horse can be put back into the automobile, thousands of lives could be saved, as cars become nervous of their drunk owners, and refuse to get into positions where they would crash at high speed. We may look back in amazement at the carnage tolerated in this age, when every western country had road deaths equivalent to a long, slow-burning war. In the future, drunks will be able to use cars, which will take them home like loyal horses. And not just drunks, but children, the old and infirm, the blind, all will be empowered.”

————-

Google, of course, is actually working on this (receiving the technological torch from Carnegie Mellon University, I guess), but they get no credit for it from >Hists at all, as far as I can tell.

Driving a car is much closer to having an infrastructure for intelligence than parsing human language or doing math, IMO. “Being precedes describing,” as Gerald Edelman once put it.

There was a work of fiction published – oh, back in ’96 I think: _Society of the Mind_ by Eric L. Harry (not to be confused with _The Society of Mind_ by Marvin Minsky ;-> )

http://www.amazon.com/Society-The-Mind-Eric-Harry/dp/0340657243/ .

It’s usually classified as a “thriller” rather than pure SF, but it has not just self-driving cars, but some interesting (and sometimes chilling) scenes involving the training of robots to interact both with inanimate objects and with living things. Much more insightful than the stuff that was being posted to the Extropians’ at the time about AI.

Luke writes:

> On another note, one thing I’ve seen come across clearer with Yudkowsky than just about any other transhumanist writer is the absolute moral priority of human life over any other pursuit. People shouldn’t have to die, period.

Mike Perry said it earlier. I don’t think Yudkowsky has given him proper credit.

I did not say he was the first to say it, only that it has come across clearer. Of course he’s standing on the shoulders of giants; I never got the impression he was pretending otherwise.

As usual an enjoyable read.

While nanotechnology is certainly in its earliest stages, I have to be skeptical that it will ultimately prove the solution to reviving patients in suspension. The same temperatures needed to keep tissues from decaying will likely prevent molecular machines from functioning well.

No, I imagine there will need to be the emergence of brand new technologies we can barely imagine to restore resting cryonauts. As such the time frame will be much longer than most might guess and I believe we need to plan for 500 years plus.

As to how we will view ourselves when immortality is possible – this too is difficult to imagine. Psychologically we have all learned, or are struggling to learn, how to cope with the concept of death and possibly nonexistence. It is fair to say that religions are ultimately based on this need and you can’t overestimate how powerfully this colors all aspects of human existence and civilization.

> The same temperatures needed to keep tissues from decaying will likely prevent molecular machines from functioning well.

My intuition after reading up on the topic (Richard Jones’ Soft Machines is a good book that is skeptical of clockwork style nanomachines) is that ultra-low temperatures would actually make it easier to use more sophisticated and precise molecular nanomachinery. It would reduce the incidence of Brownian motion and make it possible to expose tissues to a hard vacuum without instant dehydration. Otherwise, for warm/wet temperatures you would need a biological style of design, which apparently limits your options somewhat.

The most conservative low-temp scenario I can imagine would involve the entire brain (and any other tissue to be recovered) being sliced into very thin layers, with special-purpose nanomachines printed on the surfaces to perform the repairs. The tissue would be repaired slowly, perhaps using a very low-energy process to avoid producing heat. Eventually all cracks would be repaired, toxic vitrification agents replaced with less toxic ones, cellular membranes sealed back together along the fault lines, and major synapses reconnected as far as is possible. A temporary scaffold would be put in place throughout the tissue capable of preventing new fractures during rewarming, distributing heat, and removing cryoprotectant.

Only then would the body be returned to its warm, wet natural state. At that point there would be biomimetic nanomachines and genetically engineered microorganisms to support the patient’s recovery and prevent the ischemic cascade from progressing. These could be introduced in cryogenic form prior to rewarming to the precise sites needed, along with tissue-specific stem cells to replace those that were beyond repair.

Paul B writes:

>No, I imagine there will need to be the emergence of brand new technologies we can barely imagine to restore resting cryonauts. As such the time frame will be much longer than most might guess and I believe we need to plan for 500 years plus.

I guess you don’t accept Drexler’s assessment of his Nanotechnology as “the last technological revolution.”

I suspect it has hurt cryonics’ reputation more than we realize by tying it too closely over the past quarter century to a speculative technology which simply hasn’t arrived, and which probably won’t arrive if it gets the physics wrong from the beginning. Contrast Nanotechnology with the rapid progress of technologies which exploit quantum mechanics, like magnetic resonance imaging in medicine.

You can see cryonics’ loss of status in popular futurology when you compare Ed Regis’s book published circa 1990 with Mark Stevenson’s similar book published recently. Regis treats cryonics ironically, but at least he gives cryonics activists the opportunity to have their say. Stevenson, by contrast, dismisses cryonics early on in a single paragraph, and compares it to Alex Chiu’s immortality rings.

I can understand many cryonicists’ reluctance to admit they’ve misinvested their hopes for such a large portion of their lives so far. Something akin to sunk-costs reasoning keeps us from writing off the mistake and looking for better opportunities.

I would prefer for the cryonics movement to distance itself from the propeller heads’ current fads (Ray Kurzweil, for example) and orient the project in reference to a longer time horizon, as Paul B suggests. In recent years the leaders of the cryonics movement have taken too many of what Mike Darwin back in the 1980s called “soft options.” For an assemblage of individuals who claim we want to live a really long time, and who think about “the future” a lot, we certainly don’t display the best judgment about the practical aspects of survival.

I cannot speak for others, but my belief in hard nanotech (as a significantly probable future development) has nothing to do with sunk costs. I would be happy to accept living in a world where we know it to be impossible or highly unlikely. The same goes for uploading and the singularity. In fact if it could be proven impossible on legitimate factual grounds, I’d be happier knowing that the premise of cryonics itself — that tomorrow’s tech can revive today’s apparent corpses — is false. But why should I pretend to be convinced by shaky arguments?

>In fact if it could be proven impossible on legitimate factual grounds, I’d be happier knowing that the premise of cryonics itself — that tomorrow’s tech can revive today’s apparent corpses — is false.

The bogosity of “nanotechnology,” as Drexler has defined it, doesn’t mean that cryonic revival can’t happen at all. It just means it can’t happen using his misconceptions. Drexler and his fans have done cryonics a disservice by channeling our thinking about it down an unproductive path for the past quarter century. It has also had the effect of rationalizing negligence in suspension procedures because our Friends in the Future with their magic nanomachines can repair the damage caused by suspension teams’ fuck ups.

> It has also had the effect of rationalizing negligence in suspension procedures because our Friends in the Future with their magic nanomachines can repair the damage caused by suspension teams’ fuck ups. . .

Really – if you take some of the “Singularity” discourse at face value – and a lot of >Hists do, and are unwilling to acknowledge **any** limits: “If we can imagine it, it can be done!” – then why even bother with suspension?

Folks at the end of time will be able to look back with a super-telescope

and interpolate the position of every atom in the universe at any point

in time, and resurrect every virus, every amoeba, every dinosaur,

and every human who ever lived.

Folks will be revived whether they were cryonauts or not! (Sounds

something like Heaven, dunnit?)

Of course this was Frank Tipler’s idea, and in fact there was a bit

of it in Stapledon’s _Last and First Men_ and _Star Maker_ — not

literal resurrection, but the Last Men could telepathically contact

humans from earlier ages in order to “understand” and “appreciate”

them, and thereby enhance their significance in the history of the

universe, or at least enhance the Last Men’s understanding and appreciation

of their significance in the history of the universe, or

something. Something like the Mormons baptizing the dead.

Curtis Henderson is certainly correct in saying that if you really want to live forever, you have to accept that you will lose everyone around you and that you will experience both the good and the bad. With infinite life, attachments become temporary in comparison. I don’t think many people can handle this.

In life, shit happens. The longer you live, the more shit happens.

So, does anyone have some ideas about stopping the cryonics movement’s decline?

Perhaps the solution is to make it more fun, upbeat, and entertaining.

Yes, people like us have to get involved and face the “rain of hammers” as a former Alcor CEO put it, turn things around, and make it happen. The problem with me is that I do not have the money, the time, nor the inclination to do so currently.

Abelard, would you care to email me? I’d like to know more about your situation.

markplus@hotmail.com

Mark,

I sent you an email explaining my situation.

All this talk about high school/college drop-outs with little formal education; the likes of Yudkowsky and Anissimov (my generation; my age falls in between theirs)… Here I am with an AA and a BA and the minor annoyance of a couple tens of thousands of dollars of student debt, awash in the doldrums of meaningless, menial labor like a good little drone while these guys shape the conversation (i.e., dealing with the guilt by association vomited onto me by Amor Mundi’s Dale Carrico).

On the one hand, Mark’s insistent dismissal of Yudkowsky as a “high-school dropout” is myopic. Yudkowsky and Anissimov are very clearly more astute than the vast number of inculcated academics like Carrico.

If anything, the ascension of individuals from my generation who are “taken seriously” (at least by some in the credentialed establishment) despite lacking any credentials of their own is evidence of the abysmal shortcomings of our current incarnations of formal education (what Carl Sagan once described as “reptilian”); suffocation of our society by traditional credentialing…

The rejoinder would seem to be that as more and more individuals realize that the mere *ease of access* to knowledge afforded today gives them the (potential) power to engage *anyone* on *any* subject, the credentials that *actually matter* will be practical accomplishments and experience they’ve earned over the course of their own careers (to the extent they can be called such). I think this applies to some in the “cryonics community” who are, unfortunately, not taken seriously.

I don’t harp on Yudkowsky’s dropout status as much as you think, and not for the reasons you might assume. Many transhumanists like Yudkowsky give themselves contrived livelihood descriptions like “artificial intelligence theorist,” which means what, exactly? How do you become an “artificial intelligence theorist,” and according to what accepted criteria? The Indian mathematician Ramanujan lacked academic credentials, but he sent letters to the English mathematician G.H. Hardy which demonstrated genuine mathematical ability. (Charlatanism doesn’t work in mathematics, for obvious reasons.) Has Eliezer done something similar? And what revolutionary or even just plain-vanilla useful thing has he accomplished as an “artificial intelligence theorist”?

If Yudkowsky had instead gone into, say, game design and had some success at that, I wouldn’t care about his lack of credentials. His computer games would go for sale on the market, people could buy them and try them out, and then they could decide for themselves whether they got their money’s worth.

No, Yudkowsky bothers me because he has become a geek celebrity through the promotion of an illusion of progress, without the substance, and he also shows signs of becoming the next L. Ron Hubbard.

Fortunately transhumanists tend to have short attention spans, if they don’t lose interest in transhumanism altogether as the Great Stagnation wears on and the reality principle (aging and mortality) intrudes, so I suspect if we have another crop of transhumanists in 2022, many of them will ask “Eliezer who?” as they latch onto newer and even more meretricious celebrities.

I agree; I think one of the reasons Yudkowsky and the like are benefiting from the popular sentiment behind the TED tide that rises all faux-intellectualist boats is because they, like me or anyone, know how to regurgitate facts.

Hell, speaking of AI: this phenomenon might reveal something about just how people could react to a Wiki-bot capable of white-noise philosophical musings about things that don’t exist (i.e., the “S”ingularity). It has encyclopedic knowledge, so it *must* be a sage!

“No, Yudkowsky bothers me because he has become a geek celebrity through the promotion of an illusion of progress, without the substance, and he also shows signs of becoming the next L. Ron Hubbard.”

Prescient. I can imagine an SIAI awards ceremony where Yudkowsky receives an over-sized medal à la Tom Cruise in front of a gargantuan portrait of LRH/RK himself, Ray Kurzweil, long since vanished from public sight but rumored to have uploaded his mind into a water ionizer.

I saw a preview of the film called “The Master,” apparently about a Hubbard-like cult founder in the 1950s. In one scene the cult founder calls himself a “nuclear physicist,” and I realized that that probably sounded cool and futuristic in that decade before public opinion soured on nuclear technology. It wouldn’t work now for the cult founder to use the N-word in his phony résumé, so today if he wants to impress the rubes by professing expertise in branches of science which haven’t become that familiar yet, he could call himself an expert in fields with the words “cognitive,” “evolutionary” or “Bayesian” in them.

Sound like anyone we know?

These attacks on Eliezer miss the bigger picture. It is the cumulative effect of advances in computer science, cognitive science, computer technology, and neuroscience which is creating the conditions for a “singularity”. That is a situation that would still exist, even if Eliezer converted to esoteric Islam and went to live out the rest of his life in Qom. He didn’t create this situation, and you can’t wish it away by attacking his credibility.

It ought to be obvious that if you have autonomous artificial intelligences, we need to be concerned about their actions, and about the possibility that their intelligence will permit them to be more than humanly competent in planning and executing these actions. And let’s see… who is best known for raising this as a problem in the real world we actually inhabit, and not just depicting it as a problem in science fiction (of course Asimov was the leader in the latter respect)… Yes, it’s Eliezer Yudkowsky, the guy who created a name for the solution, “Friendly AI”.

Just having names for problems and their solutions is incredibly important. If you don’t even have a name for it, chances are you can hardly think about it. Just for inventing the name alone, Eliezer has made more of a contribution to solving the problem than 99% of humanity ever will. But of course his contributions aren’t limited to a phrase. If anything, his “problem” is that he has too much energy and too many ideas. He may yet be overtaken in relevance by others who were more disciplined, and less interested in (or just less capable of) drawing a crowd. But the situation which he has structured his life to address is not going to go away.

> . . .benefiting from the popular sentiment behind the TED tide that rises all faux-intellectualist boats is because they, like me or anyone, know how to regurgitate facts. . .
>
> [T]his phenomenon might reveal something about just how people could react to a Wiki-bot capable of white-noise philosophical musings about things that don’t exist. . .

No, there are other necessary ingredients (**emotional** ingredients), including a usually unexamined and unacknowledged dynamic between guru and follower(s), that are required to found a cult (and not just to be invited to appear on a stage at TED).

The guru really needs to have an irrational degree of self confidence, and an attitude of overweening haughtiness that would engender mockery in an unsusceptible audience, but that in individuals susceptible to the “guru whammy” elicits an emotion that can be as intense as romantic love. (See, for example, Andre Van Der Braak’s _Enlightenment Blues_.)

>It is the cumulative effect of advances in computer science, cognitive science, computer technology, and neuroscience which is creating the conditions for a “singularity”. That is a situation that would still exist, even if Eliezer converted to esoteric Islam and went to live out the rest of his life in Qom. He didn’t create this situation, and you can’t wish it away by attacking his credibility.

What “situation,” Mitchell? Computing shows signs of running into the Great Stagnation along with other fields of engineering. Google has $50 billion in the bank (which probably earns effectively zero or negative interest, thanks to Ben Bernanke) that the company doesn’t know what to do with because, as Peter Thiel argues, its decision-makers don’t have any breakthrough new technologies to spend it on.

>Just having names for problems and their solutions is incredibly important. If you don’t even have a name for it, chances are you can hardly think about it.

Yeah, just like coining the word “nanomedicine” has resurrected the cryonauts and made us all immortal supermen by now. What nonsense. This attitude shows classic magical thinking. (Kind of ironic for a cult based on “rationality”!):

http://en.wikipedia.org/wiki/Magical_thinking#Other_forms

“Bronisław Malinowski, Magic, Science and Religion (1954) discusses another type of magical thinking, in which words and sounds are thought to have the ability to directly affect the world. This type of wish fulfillment thinking can result in the avoidance of talking about certain subjects (“speak of the devil and he’ll appear”), the use of euphemisms instead of certain words, or the belief that to know the “true name” of something gives one power over it, or that certain chants, prayers, or mystical phrases will bring about physical changes in the world. More generally, it is magical thinking to take a symbol to be its referent or an analogy to represent an identity.”

> Google has $50 billion in the bank … that the company … doesn’t know what to do with

Eric Schmidt declined to agree with Thiel’s assessment.

> “just like coining the word “nanomedicine” has resurrected the cryonauts and made us all immortal supermen by now”

In my part of the world, it served to get all these people together a few months ago:

http://www.oznanomed.org/

I can think of all kinds of things to spend some of that $50 billion on (although it would not require anything close to this amount). There are all kinds of biotech and 3D printing/additive manufacturing technologies to develop. There is LENR (which I’m convinced is real), not to mention the “hot” fusion start-ups, such as Tri-Alpha and EMC2. Paul Allen has put $40 million into Tri-Alpha (a B11-H fusion start-up).

Lastly, there is the Woodward-Mach propulsion that also appears to be real.

Or even just ordinary thorium power plants, assuming that Google could get around the legal prohibitions.

One of the blogs I follow argues that America’s ruling class depends on wealth tied to fossil fuels for its support. The nuclear power industry makes the ruling class uneasy because it shifts status to people who earn it through diligence, good character & cognitive ability, even if they come from the gutter, instead of the ones who inherit family fortunes and go to Ivy League schools. As Ayn Rand might have said, nuclear power belongs to the Men of the Mind.

LENR = much ado about a measuring error. I’d prefer to bet on the acronyms ITER and DEMO instead. Despite the intriguing mathematical underpinning, I don’t know of any empirical basis for the claim that the Woodward effect “appears to be real.”

> . . .to know the “true name” of something gives one power over it. . .
>
> More generally, it is magical thinking to take a symbol to be its referent or an analogy to represent an identity.

Which makes it somewhat ironic that Yudkowsky credits (or credited, 15 years ago in a post on the Extropians’ list) his initial decision to “devote his life to bringing about the Singularity” to reading Vernor Vinge’s _True Names_.

Robert Ettinger articulated many of the key ideas of today’s transhumanism in his 1972 book, Man Into Superman, though without the current emphasis on computing, in his effort to present cryonics as part of a bigger revolution in world views. His name for this outlook, the New Meliorism, never caught on, however. Specifically Ettinger writes:

http://www.cryonics.org/chap11_1.html